
© Shutterstock

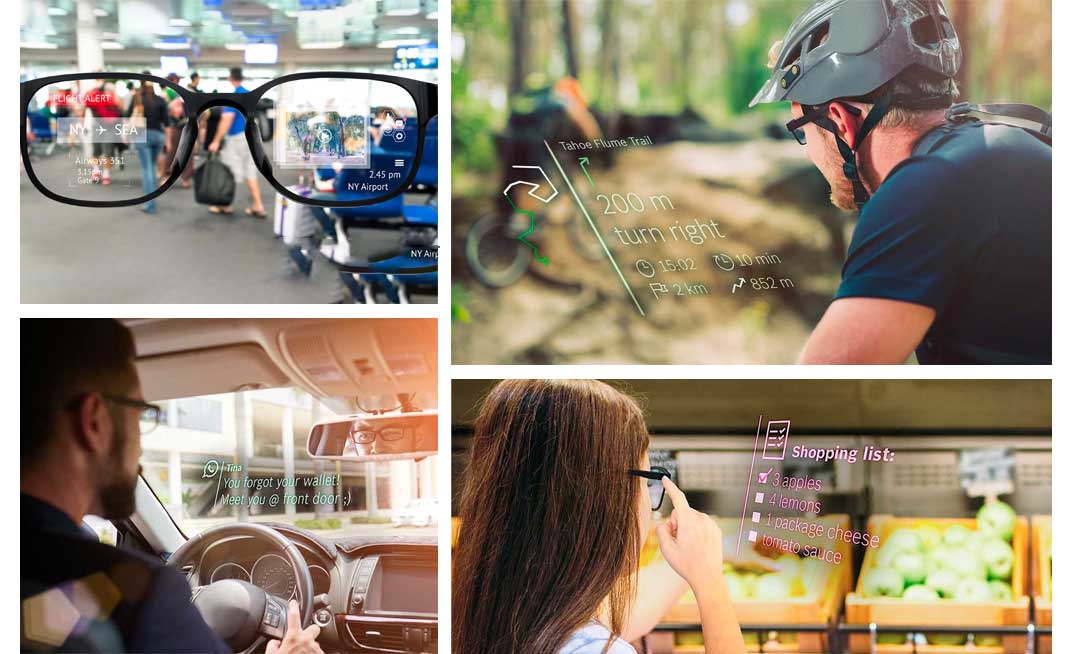

The first connected glasses designed for the public were hardly a roaring success, but for the last few years, the big names in the digital world have been busy preparing their comeback. In this, the second part of our report on the smart glasses of the future, we have tried to imagine what the next steps in technology could look like, with new interactive and proactive features, and how they will be able to do all this in real time.

Just like the virtual assistants that already help us in our daily lives (Siri and Google Assistant's ‘OK Google’), the glasses of the future will answer our questions and give us instructions. Unlike what we know today, however, they will use artificial intelligence (AI) to anticipate our needs and respond to them. To process enough information to resemble a form of ‘real’, human intelligence, this AI will rely on three components that are already present in our smartphones.

The first is the CPU (Central Processing Unit), the powerful calculator in every computer that will carry out the calculations needed for AI. The second is the cloud, already used by our virtual assistants, which harnesses the power of remote servers to make the necessary calculations. Finally, there's the NPU (Neural Processing Unit), the beating heart of more modern chips, whether Apple's A13 Bionic, Samsung's Exynos 9820, Huawei's Kirin 810 or Intel's NNP. Entirely dedicated to artificial intelligence, this core is around 25 times more powerful at AI tasks than a processor without an NPU, and is capable of recognising things and making decisions. It's thanks to the NPU, for example, that some modern cameras can suggest specific settings based on their surroundings, and that Google Lens can recognise the exact breed of dog in a photo.

© Techjuice

Technology manufacturers might use the term ‘intelligence’ a bit haphazardly, but in reality there are two distinct types of artificial intelligence. The first, termed ‘weak’, reproduces a specific behaviour without really understanding how or why. In other words, it seeks to imitate as closely as possible a behaviour that was designed in advance, but it is not capable of improvising. These systems specialise in one given area and are limited to specific tasks and types of activity. They have already been around for a while in search engines, navigation systems, personalised recommendations on websites and apps, video games, translation services and the virtual assistants in our smartphones and other connected devices.

What will mark out the glasses of the future is the second, much more sophisticated type of artificial intelligence. Not only will it be able to reproduce specific behaviours, it will also be able to make predictions and judgements and anticipate what is coming. Fed with more and more data, combined with ever more effective AI-dedicated technologies and driven by ever more powerful algorithms, the virtual assistants that live inside the glasses of the future will know their wearer's habits by heart. They will be able to identify and anticipate the wearer's needs, quickly making themselves indispensable.

© Martin Hajek, Apple

© Microsoft

© Shutterstock

To be intelligent, the glasses of the future will need to be part of an interconnected ecosystem known as the Internet of Things (IoT). Put simply, this is a worldwide infrastructure in which billions of everyday objects are connected and so able to talk to each other. Experts in the Internet of Things estimate that 75 billion objects will have joined the network by 2025. They also estimate that just 1% of this technology's potential is currently being used.

This network of interconnected objects will be made possible in large part by the arrival of 5G. Improvements to wireless standards such as Bluetooth and Wi-Fi will also play a role, as will the ever-expanding low-power wide-area networks (LPWANs). Built into an exponentially growing number of everyday objects, these types of network can already be found in Linky smart electricity meters and streetcars.

The Internet of Things is an essential cog in the digital ecosystem of the future: it is what will allow a vast quantity of data to be collected and shared in real time. Whether or not it passes through cloud computing, the data collected will constantly be fed into servers, supercomputers and dedicated processors that analyse and enrich it. The glasses of the future will be able to access all the information sent by other objects in the network. This will place them at the heart of our digital arsenal and make them totally interdependent with the Internet of Things.

Augmented reality works by superimposing computer-generated visuals onto the real environment in real time, allowing us to see virtual objects in the real world. Mixed reality goes one step further by allowing us to actually interact with these virtual elements. In the world of eyewear, virtual images appearing on lenses are already a reality thanks to head-up displays (HUDs). We've already discussed augmented reality swimming goggles. We could also mention the French brand Julbo, which is preparing to launch augmented reality glasses for sportspeople using this type of technology.

Snapchat and Instagram filters can be seen as a ‘basic’ form of augmented reality, one that most people know and use for fun. Then there was the phenomenal success of Pokémon Go, the smartphone game that has been downloaded more than a billion times since its launch in 2016. It was the first platform to shine a spotlight on mixed reality, superimposing interactive virtual elements on a real, geolocated environment. On a side note, Niantic, the company behind the game, has since announced a partnership with American microchip giant Qualcomm to help develop its own mixed reality glasses.

While most people use mixed and augmented reality for fun, this technology was first intended for the professional and educational sectors. Having pulled out of the consumer market several years ago, Google and Microsoft have been refining and innovating to appeal to professionals with the Google Glass Enterprise Edition and Microsoft HoloLens. At the cutting edge of this sector, Microsoft offers a range of innovative technologies for maintenance and for studying objects, machines or even a whole environment. Many architects, engineers and designers now use these tools to create 3D mixed reality renderings of a future project. These renderings help them improve its look, its layout and how it will work in practice. There's no doubt that this growing know-how, along with advances in professional software and hardware, will find its way into the smart glasses of the future.

There are already several good examples of mobile apps inspired by those Microsoft and Google made for the professional world. Ikea Place and Amazon's app let you place objects virtually in a room to see how they will fit in your home before you buy them. Virtual tour apps have taken off in real estate, at cultural sites and in navigation. The new Live View function in Google Maps overlays directions onto a camera image of the surroundings, something that could be integrated into future connected glasses.

These glasses will have depth perception that can render an environment in 3D, and stereoscopic screens that create the illusion of perspective. They will also have motion sensors that accurately track where we place our hands and fingers. As well as microphones, they will have systems that can follow and analyse eye movements: where we are looking, how fast our eyes move, the order in which we look at things and for how long. The user will thus be able to interact in real time with a virtual space that blends ever more effectively and more permanently with the real world, simply by using their hands, eyes or voice.

© Bosch

Not only will the glasses of the future adjust to the light, they will also be able to enhance our view of anything in sight. On request, they could even display areas our eyes cannot see, thanks to cameras built into the back and sides of the arms.

With built-in light sensors, the tint of the lenses will adjust automatically to the amount of UV in sunlight: the more intense the light, the darker the lenses will become. When the wearer is plunged into complete darkness, their glasses will let them see as clearly as in daylight using AI-powered night vision.

Just like today's photochromic lenses with their photosensitive layer, the lenses will become transparent again when the light returns to normal. But whereas current lenses take several seconds to react to a change in light, the glasses of the future will change instantly.

Reacting in real time, these glasses will be able to extend our field of vision to 360 degrees. They will also be able to zoom in on a specific zone, isolate it, sharpen it and even retouch it so we can share it with our friends. Combined with geolocation, artificial intelligence will be able to provide relevant information and suggestions based on both the wearer's location and their profile, displaying them as text and virtual elements.

© Microsoft

Beyond their many ergonomic, technological and psychological problems, the first connected glasses failed commercially just as much because of their appearance. Google Glass was built around a large titanium band and a bulky plastic unit sitting above the right eye to house the camera and mini projector. This design choice made the result unattractive and lopsided, and gave the wearer the look of a cyborg. People who came across them were left feeling curious or sceptical, at best mocking and at worst suspicious. The awkward design was made worse by the weight, closer to that of a virtual reality headset than a pair of everyday glasses. The poor balance also quickly made them uncomfortable.

But from the ashes of these failures came lessons: we now know that the glasses of the future will need to be lighter, more comfortable and more discreet than their predecessors if they are to win over the public. Bone conduction, which we've already talked about, will help make them more discreet by transmitting sound as vibrations through the bones of the skull to the inner ear. Built into the arms of the glasses, this technology is invisible when they are worn and more discreet than audio devices such as headphones and speakers. It also doesn't block out surrounding sounds.

These glasses can only become light and comfortable if their components get smaller, by taking advantage of technological innovation and discoveries in biomimetics. Biomimetics, which has been gaining ground since the 1990s and has already given rise to many important innovations, takes inspiration from naturally occurring systems, shapes and materials for its designs. Smaller, more reliable and longer-lasting batteries have been made possible thanks to natural algae and graphene. Microscopic cameras have taken inspiration from the fovea of eagles and been made more effective thanks to studies of bees' eyes. The glasses of the future will take inspiration from nature too.

Joël de Rosnay also considers today’s materials “completely out of date compared to the materials of the future”, and expects them to be taken over most notably by biomaterials: “Glasses today are made from glass and plastic, but with biomaterials, we are heading towards ones made from proteins, fats, collagen and even sugar. These biological materials will be modifiable at will and that will change everything”. It is hard to predict when these biomaterials will replace conventional ones, but one thing is certain. To win over the public, the glasses of the future will need to strike a balance between the many technologies and components they carry, their overall look and their comfort.

Article created in partnership with Jaw Studio and Joël de Rosnay, Special Advisor to the President of Universcience.

Joël de Rosnay is a biologist, writer and expert in future studies. A Doctor of Science, he is Special Advisor to the President of Universcience (Cité des Sciences et de l’Industrie de la Villette and Palais de la Découverte) and President of Biotics International.

After a research and teaching position in biology and computer science at the Massachusetts Institute of Technology (MIT), he became Scientific Attaché to the French Embassy in the United States. He was also Scientific Director of the European Enterprises Development Company (a venture capital group) and Director of Research Applications at the Pasteur Institute in Paris.

Joël de Rosnay has also written several popular science books, including ‘Le Macroscope’ (1975), ‘L’homme symbiotique, regard sur le troisième millénaire’, Seuil (1995), ‘La révolte du pronétariat’, Fayard (2002), ‘2020 les scénarios du futur’, Fayard (2008), ‘Et l’Homme créa la vie : la folle aventure des architectes et des bricoleurs du vivant’, with Fabrice Papillon, Éditions LLL (2010), ‘Surfer la Vie’, Éditions LLL (2012), and ‘Je cherche à comprendre… les codes cachés de la nature’, Éditions LLL (2016). His latest book, ‘La symphonie du vivant : comment l’épigénétique va changer votre vie’, was published by Éditions LLL in 2018.